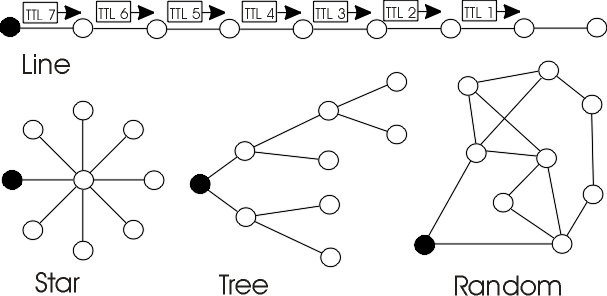

Figure 15: Topologies on the local area network; the master node is shown in black.


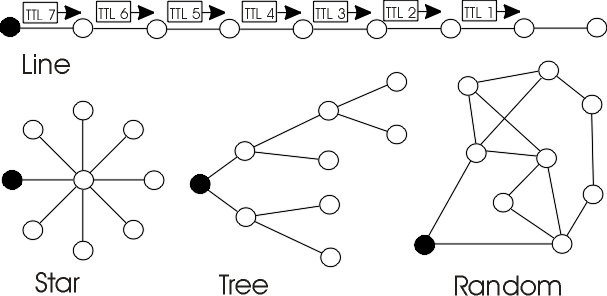

The star topology mimics the centralized model. However, we used a branch computer, not the central one, as the master. All computers received their jobs, but the central computer had trouble sending the results back. Linear speedup was not achieved because the central computer was overloaded and dropped some packets.
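Why an overloaded relay caps the speedup can be illustrated with a toy model. This is a minimal sketch under stated assumptions, not a model of GPU itself: we assume `n_jobs` independent jobs of `work` seconds each, `p` workers, and a single bottleneck node that must handle every returned result serially in `recv_cost` seconds. The function name and all parameters are hypothetical. Speedup is taken as serial time divided by parallel time, so it is linear while computation dominates and saturates at `work / recv_cost` once the bottleneck node is saturated.

```python
def modeled_speedup(p: int, n_jobs: int, work: float, recv_cost: float) -> float:
    """Toy model (hypothetical): p workers share n_jobs jobs of `work` seconds;
    one bottleneck node handles each returned result serially in `recv_cost` seconds."""
    t_serial = n_jobs * work            # one computer, no communication
    compute_time = n_jobs * work / p    # ideal parallel compute time
    relay_time = n_jobs * recv_cost     # every result passes through the bottleneck
    t_parallel = max(compute_time, relay_time)
    return t_serial / t_parallel


# With work / recv_cost = 8, speedup is linear up to 8 workers, then flat.
for p in (1, 2, 4, 8, 16):
    print(p, modeled_speedup(p, n_jobs=100, work=1.0, recv_cost=0.125))
```

The model ignores packet loss; dropped packets would only lower the measured speedup further.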

Figure 16: Speedup for the star topology

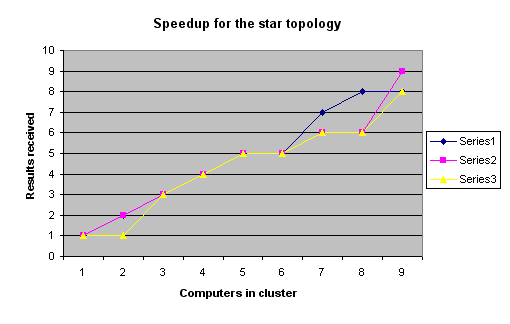

Figure 17: Speedup for the line topology

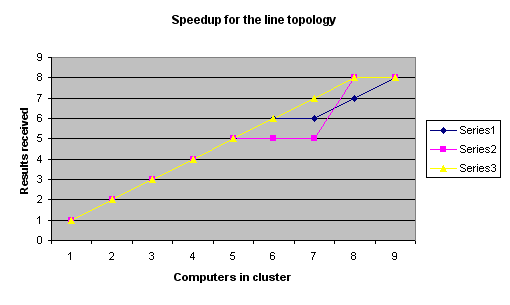

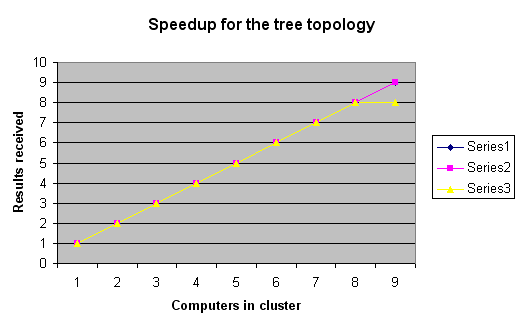

Figure 18: Speedup for the tree topology

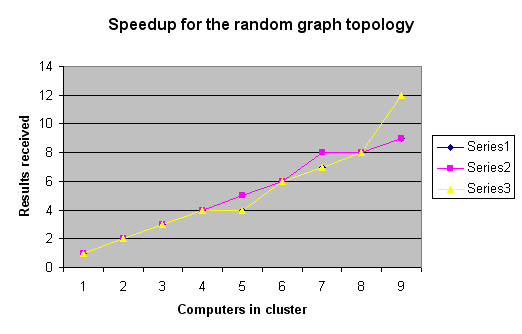

Figure 19: Speedup for the random graph topology

Results for the random graph topology show that GPU is not yet ready to scale: we found duplicate answers, that is, the same result returned more than once for a single job and counted each time. This explains the impossible super-linear speedup. We therefore need to fix the answer mechanism in version 0.847.
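One possible fix is to count each job at most once on the receiving side. The sketch below is illustrative only and assumes that every job carries a unique identifier; the `Answer` type, the `job_id` field, and the `deduplicate` function are hypothetical names, not part of GPU's actual answer mechanism.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Answer:
    job_id: int   # hypothetical unique identifier assigned to each job
    result: str


def deduplicate(answers: list[Answer]) -> list[Answer]:
    """Keep only the first answer received for each job_id, preserving arrival order."""
    seen: set[int] = set()
    kept: list[Answer] = []
    for answer in answers:
        if answer.job_id not in seen:
            seen.add(answer.job_id)
            kept.append(answer)
    return kept


# Five answers arrive, but two are duplicates flooded over different paths.
received = [Answer(1, "a"), Answer(2, "b"), Answer(1, "a"), Answer(3, "c"), Answer(2, "b")]
print(len(received), len(deduplicate(received)))
```

Computing throughput from the deduplicated count, rather than from every received packet, would keep the measured speedup from exceeding the number of computers.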