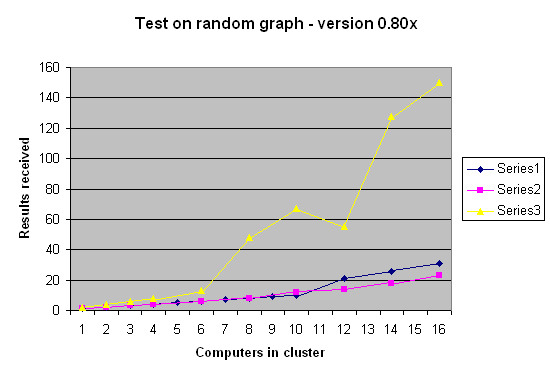

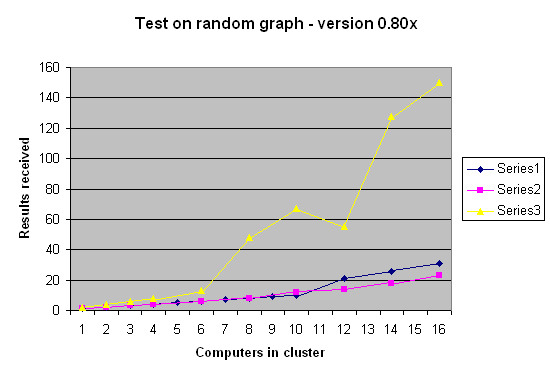

Figure 20: First big cluster on a random graph (January, version 0.80x).

The first test was run using GPU 0.80x at the end of October in room HG E27. The results clearly show that GPUs in this version do not scale linearly: in one of the three series, the number of received results grows exponentially, and in that series we found one crashed GPU. In the other series, we occasionally received more results than there were computers involved, which was also incorrect.

A second test at the beginning of January failed because of a router crash in IFW C31. Fortunately, according to the support staff at ETH, GPU was not involved in the crash.

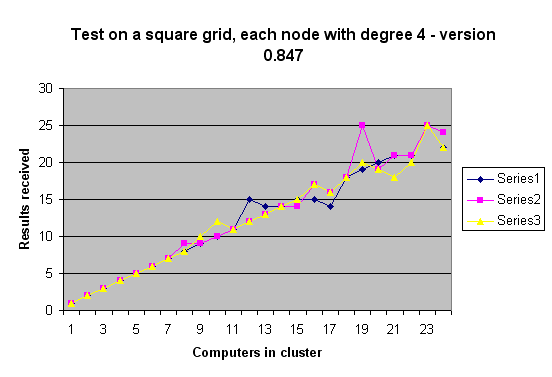

We ran a last test with version 0.847. To reduce the duplicates problem somewhat, we chose a square grid in which each node is connected to 4 neighbors. We then added nodes successively: 21 were on a local network at ETH Zürich, one was in a student's house in the same city, and one was in Russia, run by a user who occasionally connected to the network.

In this particular 2D-grid configuration, GPU scaled quite well: sometimes we had more results, sometimes fewer, but there was no exponential growth of packets. GPU should scale up to an 8x8 grid with 64 computers. Larger grids are not possible because of the TTL constraint we saw in the line topology.
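
The interplay between grid size and TTL can be illustrated with a small sketch. It is not taken from the GPU source code: the function names `grid_diameter` and `reachable_within_ttl` are hypothetical, the grid is assumed to have open borders (no wrap-around links, so border nodes have fewer than 4 neighbors), and the TTL value used in the example is only a placeholder, since the actual value is the one discussed for the line topology.

```python
from collections import deque


def grid_diameter(n):
    """Longest shortest path (in hops) in an open n x n grid: corner to opposite corner."""
    return 2 * (n - 1)


def reachable_within_ttl(n, start, ttl):
    """Count the nodes of an open n x n grid that a packet sent from `start`
    can reach in at most `ttl` hops (breadth-first search over 4-neighbor links)."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        r, c = queue.popleft()
        if dist[(r, c)] == ttl:
            continue  # packet has used up its hops, do not forward further
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < n and 0 <= nc < n and (nr, nc) not in dist:
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return len(dist)


if __name__ == "__main__":
    n = 8
    ttl = 7  # placeholder value, assumed for illustration only
    print("diameter of the grid:", grid_diameter(n))
    print("nodes reached from a corner:", reachable_within_ttl(n, (0, 0), ttl))
    print("nodes reached from the center:", reachable_within_ttl(n, (3, 3), ttl))
```

Under these assumptions, a packet covers the whole grid only if its TTL is at least the distance from the sender to the farthest node, which is why the side length of the grid cannot grow beyond what the TTL allows.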

Figure 21: Second big cluster test on a square grid (March, version 0.847).